Make Your 100 GPUs Worth 200 with AIM.

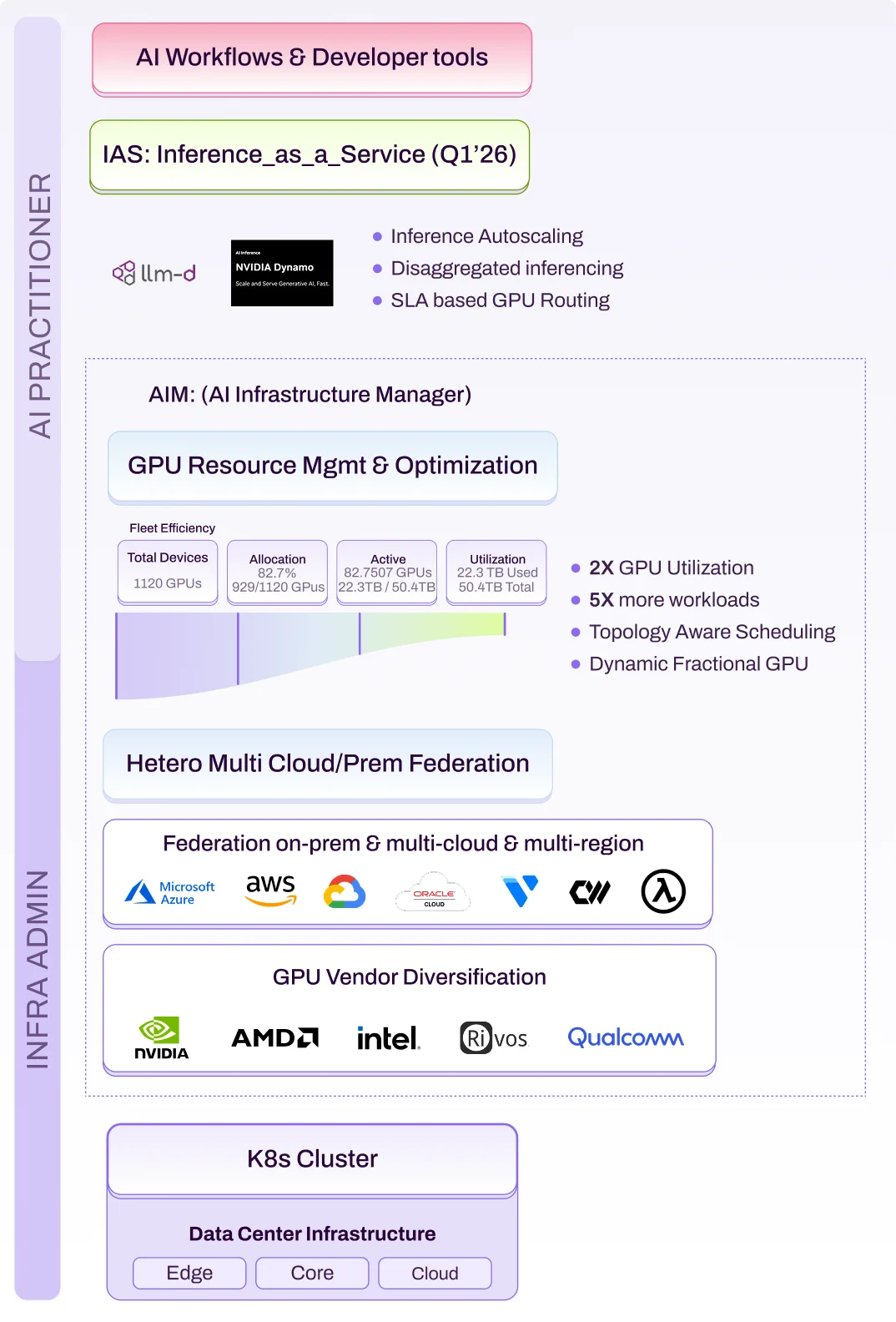

Exostellar automates AI infrastructure scaling across clouds, on-prem, and diverse GPU vendors — doubling capacity, reducing queue times, and increasing tokens per dollar.

Exostellar Enterprise AI Stack Hetero GPU Orch

AIM (AI Infrastructure Management Platform)

Business Value

Strategic Partners

Anush Elangovan, Vice President, AI Software

“Open ecosystems are key to building next-generation AI infrastructure. Together with Exostellar, we’re enabling advanced capabilities like topology-aware scheduling and resource bin-packing on AMD Instinct™ accelerators, helping enterprises maximize GPU efficiency and shorten time to value for AI workloads.”

What You Get Instantly

2x More GPU Capacity

50%+ GPU Cost Reduction

10x IT Productivity with 95% Fewer Tickets

MultiGPU: NVIDIA, AMD, Intel & xPUs

Observe everything. Orchestrate intelligently. Optimize automatically.

30-Day Free Trial

Installed before lunch, proven by dinner